Sponsor: ARL Vertical Lift Research Center of Excellence (VLRCoE)

Investigators: PI: Inder Chopra (UMD); Co-PI: Sean Humbert (UMD)

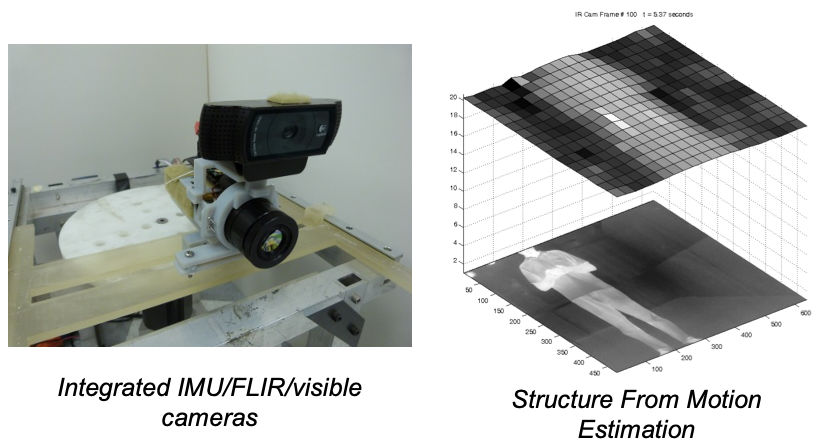

Adverse visibility conditions and atmospheric obscurants, including fog, rain, smoke, dust, low light, and darkness, significantly limit the operational capabilities and combat effectiveness of rotorcraft. These conditions reduce the visual cues that pilots typically rely on for mission-critical tasks such as landing, hovering, and terrain following. Several technologies have been employed to help overcome these operational barriers, including Forward Looking Infrared (FLIR) sensors, Terrain Following/Terrain Avoidance (TF/TA) radar, and Low Light Level Television (LLLTV). Instruments such as the LANTIRN, as equipped on the heavy-lift MH-53 Pave Low, combine these sensors to provide either visual imagery to the pilot in low-visibility conditions, so that obstacles can be monitored (FLIR), or navigational cues to the vehicle’s control system for terrain following (TF/TA radar).

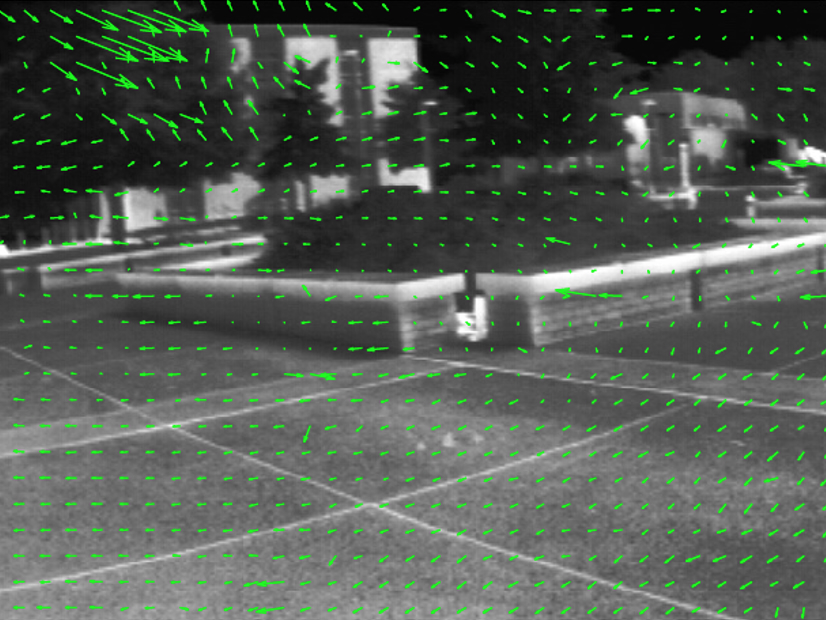

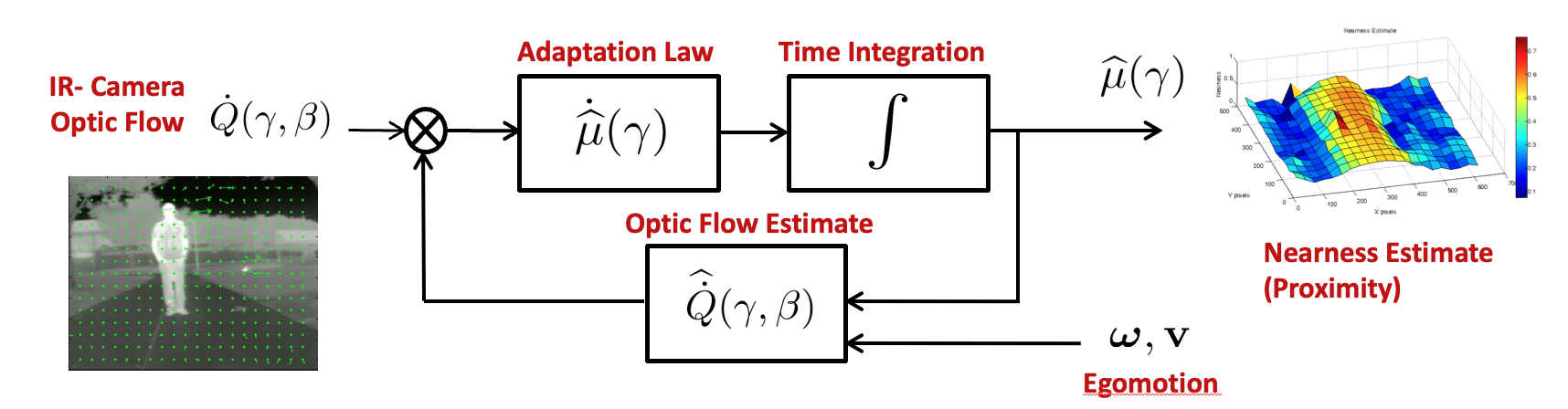

The FLIR sensor provides the pilot with an infrared image of the environment, which typically outperforms visible imaging in degraded visibility conditions. The apparent motion cues available from the moving infrared imagery (optic flow) can help the pilot make critical decisions regarding obstacle avoidance. However, directly observing an estimated motion field to interpret proximity is inefficient: it increases pilot workload disproportionately to the benefit attained. Alternatively, the estimated optic flow can be combined with knowledge of the vehicle’s self-motion to estimate environmental structure within the imaged area, which would be a useful aid to a pilot’s situational awareness. A direct estimate of environmental structure (proximity), in the form of a processed “depth” image, would allow the pilot to position the vehicle relative to observed obstacles as necessary, even in white-out or brown-out conditions, so long as the imager is able to penetrate the obscurants. Furthermore, this approach would permit automated or assisted landing capabilities even in GPS-denied environments.

Publications

- Keshavan J and Humbert JS, “Structure-Independent Motion Recovery from a Monocular Image Sequence with Low Fill Fraction,” International Journal of Robust and Nonlinear Control, 2017, DOI:10.1002/rnc.3879.

- Keshavan J and Humbert JS, “Robust Structure and Motion Recovery for Monocular Vision Systems with Noisy Measurements,” International Journal of Control, 2017, DOI: 10.1080/00207179.2017.1291997.

- Keshavan J and Humbert JS, “An Optical Flow-Based Solution to the Problem of Range Identification in Perspective Vision Systems,” Journal of Intelligent and Robotic Systems, Vol. 85, No. 3-4, pp. 651-662, 2016, DOI: 10.1007/s10846-016-0404-6.

- Keshavan J, Escobar-Alvarez H and Humbert JS, “An Adaptive Observer Framework for Accurate Feature Depth Estimation Using an Uncalibrated Monocular Camera,” Journal of Control Engineering Practice, Vol. 46, pp. 59-65, 2016, DOI: 10.1016/j.conengprac.2015.10.005.

- Keshavan J and Humbert JS, “Partial Aircraft State Recovery from Visual Motion in Unstructured Environments,” AIAA SciTech Guidance Navigation and Control Conference, Florida, 2018.

- Keshavan J and Humbert JS, “Robust Motion Recovery from Noisy Stereoscopic Measurements,” IEEE American Control Conference (ACC), Wisconsin, June 2018.

- Keshavan J and Humbert JS, “An Analytically Stable Structure and Motion Observer Based On Monocular Vision,” Journal of Intelligent and Robotic Systems, Vol. 86, No. 3-4, pp. 495-510, 2017, DOI: 10.1007/s10846-017-0470-4.

- Keshavan J and Humbert JS, “Range Identification Using an Uncalibrated Monocular Camera,” American Control Conference, Seattle, WA, May 2017.

- Keshavan J, Escobar-Alvarez H, Dimble KD, Humbert JS, Goerzen CL and Whalley MS, “Application of a Nonlinear Recursive Observer for Accurate Visual Depth Estimation from UH-60 Flight Data,” AIAA J. of Guidance, Control and Dynamics, Vol. 39, No. 7, pp. 1501-1512, 2016, DOI: 10.2514/1.G001450.

- Dimble KD, Escobar-Alvarez HD, Ranganathan BN, Conroy JK and Humbert JS, “3D Depth Estimation for Helicopter Landing Site Visualization in Environments with Degraded Visibility,” 6th AHS International Specialists Meeting On Unmanned Rotorcraft Systems, Chandler, AZ, January 2015.
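
Illustrative sketch: the project description above notes that optic flow can be combined with knowledge of the vehicle's self-motion to estimate environmental structure. The following minimal Python example shows one textbook way this can work, and it is not the observer-based method of the publications listed above. It assumes an idealized pinhole camera with normalized image coordinates (focal length 1), a static scene, noise-free flow, and exactly known camera translation `T` and angular rate `omega`. All function and variable names are hypothetical. The rotational part of the flow does not depend on depth, so it is subtracted first; the remaining translational flow scales with inverse depth, which is recovered per pixel by least squares.

```python
import numpy as np


def rotational_flow(x, y, omega):
    """Depth-independent flow induced by camera angular rate omega = (wx, wy, wz)."""
    wx, wy, wz = omega
    u = x * y * wx - (1.0 + x**2) * wy + y * wz
    v = (1.0 + y**2) * wx - x * y * wy - x * wz
    return u, v


def synthesize_flow(x, y, depth, T, omega):
    """Forward model: flow at normalized image points (x, y) for a static scene."""
    u_rot, v_rot = rotational_flow(x, y, omega)
    u = (x * T[2] - T[0]) / depth + u_rot
    v = (y * T[2] - T[1]) / depth + v_rot
    return u, v


def depth_from_flow(x, y, u, v, T, omega, eps=1e-9):
    """Per-pixel depth from measured flow (u, v) and known self-motion (T, omega).

    Returns NaN where depth is unobservable (at the focus of expansion) or
    where the estimated inverse depth is not positive.
    """
    # Remove the rotational component, which carries no depth information.
    u_rot, v_rot = rotational_flow(x, y, omega)
    u_t, v_t = u - u_rot, v - v_rot

    # Translational flow is (1/Z) * (ax, ay); solve for 1/Z by least squares.
    ax = x * T[2] - T[0]
    ay = y * T[2] - T[1]
    denom = ax**2 + ay**2
    num = ax * u_t + ay * v_t

    with np.errstate(divide="ignore", invalid="ignore"):
        inv_depth = np.where(denom > eps, num / np.maximum(denom, eps), np.nan)
        depth = np.where(inv_depth > eps, 1.0 / inv_depth, np.nan)
    return depth


if __name__ == "__main__":
    # Hypothetical test scene: a slanted plane viewed by a translating, rotating camera.
    x, y = np.meshgrid(np.linspace(-0.5, 0.5, 5), np.linspace(-0.5, 0.5, 5))
    true_depth = 10.0 + 2.0 * x
    T = (1.0, 0.0, 0.5)           # camera translational velocity (assumed known)
    omega = (0.01, -0.02, 0.005)  # camera angular rate (assumed known)

    u, v = synthesize_flow(x, y, true_depth, T, omega)
    est_depth = depth_from_flow(x, y, u, v, T, omega)
    print("max abs depth error:", np.nanmax(np.abs(est_depth - true_depth)))
```

With real imagery the flow is noisy and sparse, so a per-pixel inversion like this is fragile, particularly near the focus of expansion where the translational flow vanishes. Recursive estimators that filter depth over time are a common way to address this.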